Neural Radiance Fields for Outdoor Scene Relighting

Abstract

Photorealistic editing of outdoor scenes from photographs requires a profound understanding of the image formation process and an accurate estimation of the scene geometry, reflectance and illumination. A delicate manipulation of the lighting can then be performed while keeping the scene albedo and geometry unaltered. We present NeRF-OSR, the first approach for outdoor scene relighting based on neural radiance fields. In contrast to prior work, our technique allows simultaneous editing of both scene illumination and camera viewpoint using only a collection of outdoor photos shot in uncontrolled settings. Moreover, it enables direct control over the scene illumination, as defined through a spherical harmonics model. It also includes a dedicated network for shadow reproduction, which is crucial for high-quality outdoor scene relighting. To evaluate the proposed method, we collect a new benchmark dataset of several outdoor sites, where each site is photographed from multiple viewpoints and at different times. For each capture time, a 360° environment map is recorded together with a colour-calibration chequerboard to allow accurate numerical evaluation on real data against ground truth. Comparisons against the state of the art show that NeRF-OSR enables controllable light and viewpoint editing at higher quality and with realistic self-shadowing. Our method and the dataset are publicly available in the Downloads section below.

Method

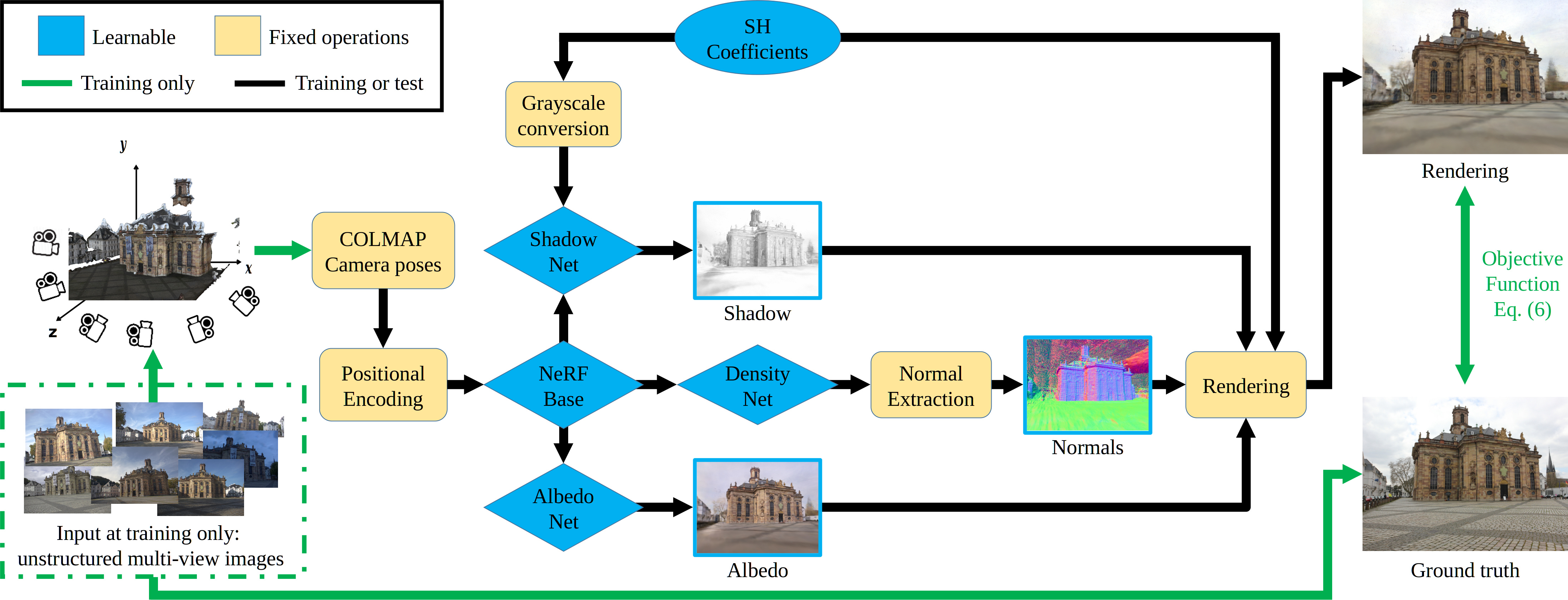

Figure 2: NeRF-OSR takes outdoor images of a site photographed in an uncontrolled setting (dashed green) and recovers a relightable implicit scene model. It learns the scene intrinsics and the illumination, the latter expressed as spherical harmonics (SH) coefficients, while a dedicated neural component learns shadows. At test time, our technique synthesises novel images at arbitrary camera viewpoints and scene illumination: the user supplies the desired camera pose and the illumination, either as an environment map or directly as SH coefficients.
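When the illumination is supplied as an environment map, it has to be converted into SH coefficients first. The sketch below shows one standard way to do this: a Riemann-sum projection of an equirectangular map onto the 9 real SH basis functions of bands 0 to 2, per colour channel. The SH order, the z-up axis convention, the pixel layout and the function names `sh_basis` and `envmap_to_sh` are all assumptions for illustration, not details taken from NeRF-OSR; the map is assumed to hold linear radiance values.

```python
import numpy as np

def sh_basis(dirs):
    """Real SH basis up to band 2 (9 terms) for unit vectors of shape (..., 3)."""
    x, y, z = dirs[..., 0], dirs[..., 1], dirs[..., 2]
    return np.stack([
        0.282095 * np.ones_like(x),      # Y_0,0
        0.488603 * y,                    # Y_1,-1
        0.488603 * z,                    # Y_1,0
        0.488603 * x,                    # Y_1,1
        1.092548 * x * y,                # Y_2,-2
        1.092548 * y * z,                # Y_2,-1
        0.315392 * (3.0 * z**2 - 1.0),   # Y_2,0
        1.092548 * x * z,                # Y_2,1
        0.546274 * (x**2 - y**2),        # Y_2,2
    ], axis=-1)

def envmap_to_sh(envmap):
    """Project an equirectangular map (H, W, 3) onto 9 SH coefficients per channel.

    Assumed layout: z is up, rows span the polar angle theta in [0, pi] from
    top to bottom, columns span the azimuth phi in [0, 2*pi).
    Returns an array of shape (3, 9).
    """
    H, W, _ = envmap.shape
    theta = (np.arange(H) + 0.5) * np.pi / H          # pixel-centre polar angles
    phi = (np.arange(W) + 0.5) * 2.0 * np.pi / W      # pixel-centre azimuths
    theta, phi = np.meshgrid(theta, phi, indexing="ij")
    dirs = np.stack([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)], axis=-1)
    # Solid angle covered by each pixel: sin(theta) * dtheta * dphi.
    d_omega = np.sin(theta) * (np.pi / H) * (2.0 * np.pi / W)
    basis = sh_basis(dirs)                             # (H, W, 9)
    # Sum of L_c(w) * Y_k(w) * dw over all pixels, for each channel c and term k.
    return np.einsum("hwc,hwk,hw->ck", envmap, basis, d_omega)
```

For a uniformly white sky of radiance 1, only the constant term survives, with value 0.282095 · 4π ≈ 3.545; all directional terms vanish up to discretisation error.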

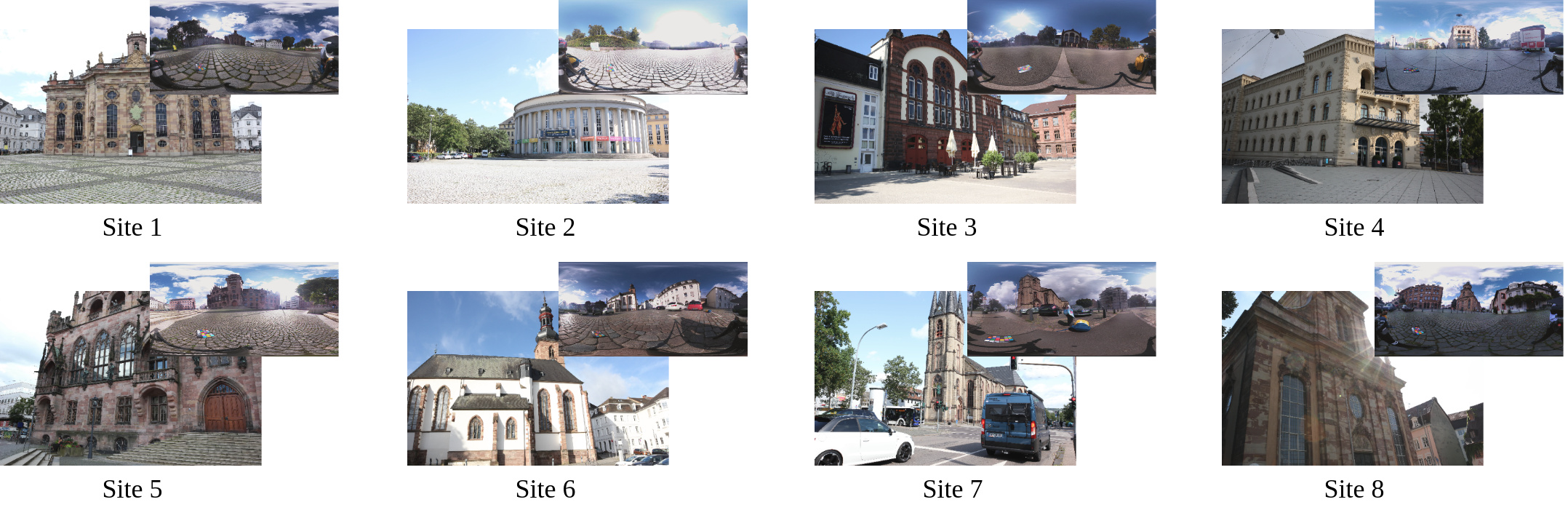

Dataset

Figure 3: Sample views from the new benchmark dataset for outdoor scene relighting. Our dataset contains eight sites shot from various viewpoints and at different times using a DSLR camera, together with a 360° camera that captures the environment map. A colour chequerboard is also captured with both the DSLR and the 360° camera for colour calibration (see Figure 4). The dataset has 3240 views captured in 110 recording sessions and is publicly available in the Downloads section below.

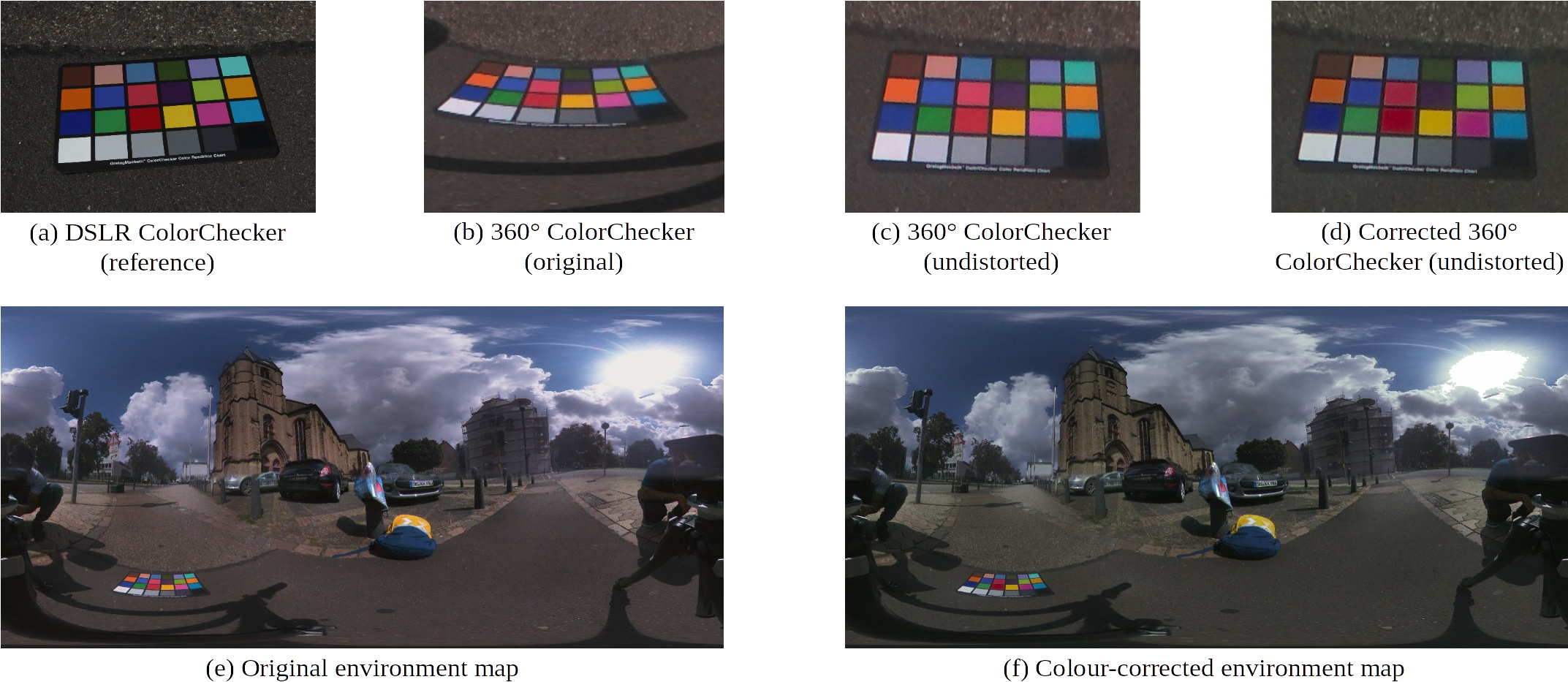

Figure 4: For each recording session in our new benchmark dataset, we capture a colour chequerboard with the DSLR (a) and with the 360° camera (b). The chequerboards are used to colour-correct the environment maps with respect to the DSLR (see (e) and (f) for before and after correction). This allows, for the first time, accurate numerical evaluation of outdoor scene relighting methods on real data against ground truth. Panel (c) shows the original colour chequerboard from (b) reprojected from the 360° view into the regular view, and (d) shows its colour-corrected version.
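The page does not state which colour-correction model is fitted to the chequerboard. A common, simple choice is a per-session affine RGB transform estimated by least squares from corresponding patch colours; the sketch below assumes that model and linear (not gamma-encoded) RGB values. The function names are hypothetical.

```python
import numpy as np

def fit_colour_correction(src_patches, dst_patches):
    """Fit a (4, 3) affine colour transform mapping src RGB to dst RGB by least squares.

    src_patches, dst_patches: (N, 3) mean RGB values of the same N chequerboard
    patches, e.g. as seen by the 360-degree camera (src) and by the DSLR (dst).
    """
    src = np.asarray(src_patches, dtype=float)
    dst = np.asarray(dst_patches, dtype=float)
    # Append a constant column so the fit can also absorb a per-channel offset.
    A = np.hstack([src, np.ones((src.shape[0], 1))])   # (N, 4)
    M, *_ = np.linalg.lstsq(A, dst, rcond=None)         # (4, 3)
    return M

def apply_colour_correction(image, M):
    """Apply the fitted transform to every pixel of an (..., 3) image."""
    flat = np.asarray(image, dtype=float).reshape(-1, 3)
    A = np.hstack([flat, np.ones((flat.shape[0], 1))])
    return (A @ M).reshape(np.shape(image))
```

With, for example, the 24 patches of a classic colour checker, the 12 unknowns are well over-determined, so the fit is robust to small measurement noise in individual patches.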

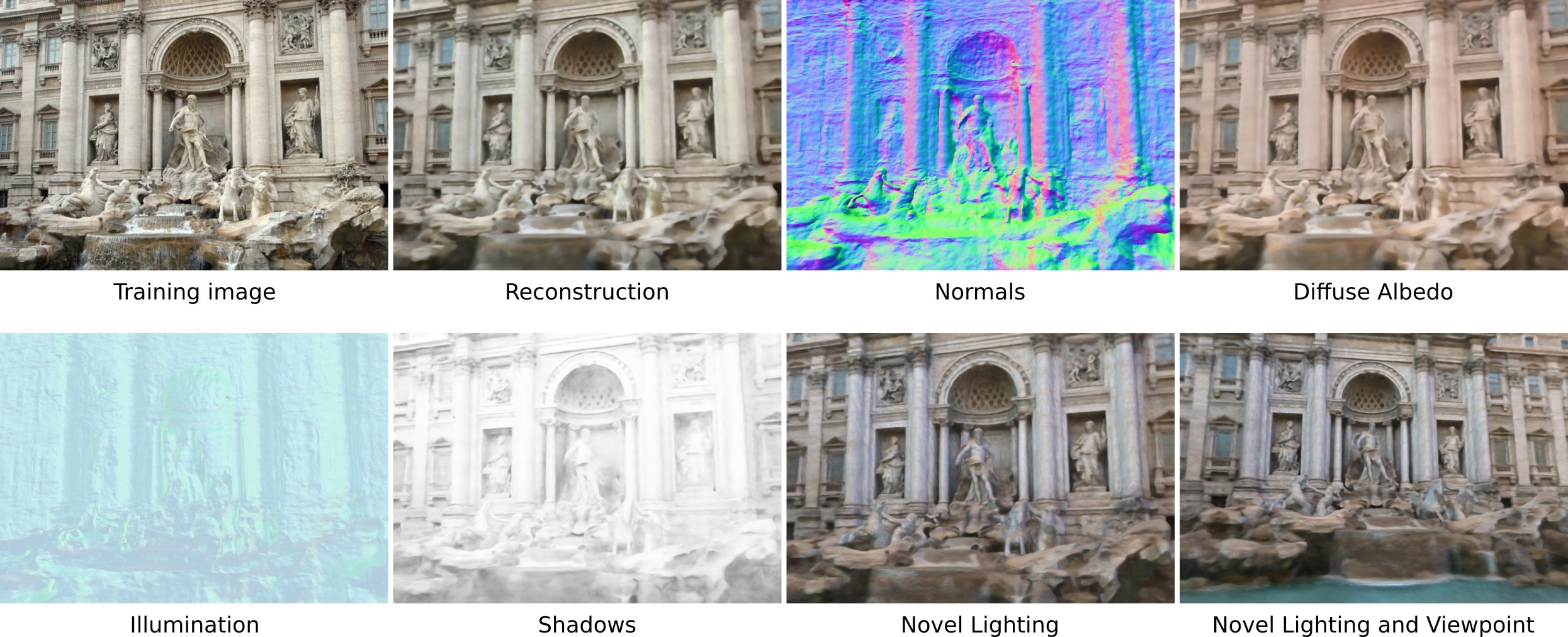

Novel Lighting and Viewpoint

Figure 5a: Site 1 (Ludwigskirche, Saarbrücken).

Figure 5b: Site 2 (Staatstheater, Saarbrücken).

Figure 5c: Site 3 (Landwehrplatz, Saarbrücken).

Figure 5d: Trevi Fountain (Phototourism dataset).

Qualitative Comparison with Yu et al.

Figure 6: We compare our technique against the work of Yu et al. Our results not only have a much higher resolution than theirs but also look significantly more natural.

Qualitative Comparison with NeRF-W (Martin-Brualla, Radwan, Sajjadi et al.)

Figure 7: NeRF-W can only interpolate between different learned appearances, and it does not allow semantic control over the appearance. In contrast, our technique decomposes the scene into multiple intrinsics, including albedo, normals, shadows and illumination, which allows direct manipulation of the lighting conditions. We also show that our technique generalises to conditions that are impossible in real life by rotating the estimated lighting parameters through a full 360° circle around the fountain.
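Rotating SH lighting about a single axis has a closed form: with the up axis as z, zonal terms (m = 0) stay fixed and each pair of cos(mφ)/sin(mφ) coefficients rotates by the angle mα. The sketch below assumes 9 coefficients per channel ordered [Y₀,₀, Y₁,₋₁, Y₁,₀, Y₁,₁, Y₂,₋₂, Y₂,₋₁, Y₂,₀, Y₂,₁, Y₂,₂] and a scene whose up direction is aligned with z; neither the ordering nor the axis is specified on this page, and `rotate_sh_about_up` is a hypothetical name.

```python
import numpy as np

# (cos-type index, sin-type index, m) for the assumed 9-term real SH ordering.
_PAIRS = [(3, 1, 1), (7, 5, 1), (8, 4, 2)]

def rotate_sh_about_up(coeffs, alpha):
    """Rotate (..., 9) real SH lighting by alpha radians about the z axis.

    The result describes the environment L'(theta, phi) = L(theta, phi - alpha).
    Zonal terms (m = 0) are unchanged; each (cos m*phi, sin m*phi) coefficient
    pair is rotated by m * alpha.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    out = coeffs.copy()
    for ic, isn, m in _PAIRS:
        a, b = coeffs[..., ic], coeffs[..., isn]
        c, s = np.cos(m * alpha), np.sin(m * alpha)
        out[..., ic] = a * c - b * s
        out[..., isn] = a * s + b * c
    return out
```

A full turn around the scene is then simply a sweep over angles, e.g. `[rotate_sh_about_up(L, a) for a in np.linspace(0, 2 * np.pi, 120, endpoint=False)]`, with one relit frame rendered per rotated coefficient set.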

Quantitative Comparison with Yu et al. and Philip et al.

| Method | PSNR↑ | MSE↓ | MAP↓ |
|---|---|---|---|
| Yu et al. | 18.71 | 0.0138 | 0.0881 |
| Philip et al. (downscaled) | 17.37 | 0.0194 | 0.1046 |
| Ours (downscaled) | 19.86 | 0.0114 | 0.0802 |
| Yu et al. (upscaled) | 17.87 | 0.0167 | 0.0967 |
| Philip et al. | 16.63 | 0.0229 | 0.1131 |
| Ours | 18.72 | 0.0143 | 0.0893 |
| No shadows | 17.82 | 0.0172 | 0.1012 |
| No annealing | 17.16 | 0.0195 | 0.1082 |
| No ray jitter | 18.43 | 0.0150 | 0.0931 |
| No shadow jitter | 18.28 | 0.0155 | 0.0954 |
| No shadow regulariser | 17.62 | 0.0181 | 0.1046 |

Table 1: Quantitative evaluation of the relighting capabilities of different techniques. Here, we report the mean reconstruction error on Site 1 from our dataset. Our technique significantly outperforms related methods (Yu et al., Philip et al.). Bottom: ablation study of our various design choices. Our full model achieves the best result.
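For reference, PSNR and MSE follow their standard definitions; the page does not define the MAP column, so it is not reproduced here. The sketch assumes images scaled to [0, 1] and adds an optional boolean mask (hypothetical; whether the evaluation excludes any pixels, such as the sky, is not stated on this page).

```python
import numpy as np

def mse(pred, gt, mask=None):
    """Mean squared error over the selected pixels and all channels."""
    err = (np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float)) ** 2
    if mask is not None:
        err = err[np.asarray(mask, dtype=bool)]   # (H, W) mask selects pixels
    return float(err.mean())

def psnr(pred, gt, mask=None, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE)."""
    return float(10.0 * np.log10(max_val ** 2 / mse(pred, gt, mask)))
```

Averaging per-image PSNR values is not the same as computing PSNR from the averaged MSE: because −log is convex, the former is never smaller. This may be why the PSNR and MSE columns above are not related exactly by PSNR = −10 log₁₀(MSE).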

Unnatural Illumination

Figure 8: The proposed approach also generalises to highly unnatural illumination. This can be leveraged to create various visual effects.

Albedo Editing

Figure 9: Another application of our intrinsic decomposition is editing the scene albedo without affecting the illumination or shadows. This is not possible with style-based methods such as NeRF-W, as they do not decompose the image into intrinsics. In this video, we replace the announcement poster in Site 3 with an ECCV 2022 poster. Note how naturally the replaced poster blends into the rest of the scene.
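Because the colour is composed from separate intrinsics, an albedo edit amounts to swapping the albedo inside a region and recombining it with the unchanged shading and shadow terms. The sketch below works on hypothetical per-pixel buffers rather than the 3D scene model, and it assumes a multiplicative model (colour = albedo × shadow × shading); the exact form of the paper's rendering equation is not reproduced on this page.

```python
import numpy as np

def edit_albedo(albedo, shading, shadow, new_albedo, mask):
    """Re-render after replacing the albedo inside a mask; lighting and shadows stay fixed.

    albedo, new_albedo, shading: (H, W, 3); shadow: (H, W, 1) in [0, 1];
    mask: (H, W, 1) in [0, 1], equal to 1 where the new albedo should be used.
    Assumes the multiplicative model colour = albedo * shadow * shading.
    """
    edited = mask * new_albedo + (1.0 - mask) * albedo   # soft blend at mask borders
    return edited * shadow * shading
```

Since the shadow and shading buffers are reused unchanged, the inserted texture automatically inherits the scene's existing shadows, which is what makes such an edit blend in.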

Shadow Editing

Figure 10: Our intrinsic decomposition also allows editing the shadow strength after rendering, without affecting the illumination or albedo. This is not possible with style-based methods such as NeRF-W, as they do not decompose the image into intrinsics.
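The page does not say how the shadow strength is parameterised. One simple option, assumed here, is to blend the learned shadow factor (1 meaning fully lit) toward 1 with a user-chosen strength and then recombine it with albedo and shading. `scale_shadow` and `render` are hypothetical names.

```python
import numpy as np

def scale_shadow(shadow, strength):
    """Blend a shadow factor in [0, 1] toward 1 (no shadow).

    strength = 1 keeps the learned shadows, 0 removes them, and values above 1
    deepen them; the result is clipped to stay in [0, 1].
    """
    shadow = np.asarray(shadow, dtype=float)
    return np.clip(1.0 - strength * (1.0 - shadow), 0.0, 1.0)

def render(albedo, shading, shadow, strength=1.0):
    """Recombine intrinsics, assuming colour = albedo * shadow * shading."""
    return albedo * scale_shadow(shadow, strength) * shading
```

Scaling the shadow occlusion (1 − shadow) rather than the shadow value itself keeps fully lit regions untouched for every strength.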

Real-time Interactive Rendering in VR

Figure 11: In contrast to style-based methods such as NeRF-W, our rendering is an explicit function of geometry, albedo, shadow and the lighting conditions (see Eq. 5). Our model provides direct access to albedo and geometry, and the lighting and shadows can be generated from the geometry at render time using any of several, potentially non-differentiable, lighting models. Hence, if geometry and albedo can be extracted at sufficient resolution, they can be rendered without slow NeRF ray marching, at little to no loss of quality compared to the original neural model. We extract the geometry and albedo from the learned model of Site 1 as a mesh using Marching Cubes at a resolution of 1000³ voxels. We then use them in our interactive VR renderer, implemented in C++ with OpenGL and SteamVR.
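Once geometry and albedo live on a mesh, relighting reduces to evaluating the shading per vertex (or per fragment). The actual renderer is written in C++/OpenGL; the NumPy sketch below only illustrates the arithmetic such a shader could perform, assuming colour = albedo × shadow × SH shading with 9 coefficients per channel. The shadow values are taken as an input array, standing in for whatever shadow technique runs at render time. The optional conversion uses the Ramamoorthi and Hanrahan clamped-cosine band factors (π, 2π/3, π/4) divided by π, which is only needed if the coefficients describe incoming radiance rather than shading. All names are hypothetical.

```python
import numpy as np

def sh_basis(n):
    """Real SH basis up to band 2 for unit normals of shape (V, 3) -> (V, 9)."""
    x, y, z = n[:, 0], n[:, 1], n[:, 2]
    return np.stack([0.282095 * np.ones_like(x),
                     0.488603 * y, 0.488603 * z, 0.488603 * x,
                     1.092548 * x * y, 1.092548 * y * z,
                     0.315392 * (3.0 * z**2 - 1.0),
                     1.092548 * x * z, 0.546274 * (x**2 - y**2)], axis=-1)

# Clamped-cosine band factors A_l / pi for l = 0, 1, 2: reflected radiance per
# unit albedo for a Lambertian surface lit by the given radiance coefficients.
_LAMBERT = np.array([1.0] + [2.0 / 3.0] * 3 + [0.25] * 5)

def radiance_to_lambertian_shading(sh_radiance):
    """Convert (3, 9) radiance SH coefficients into (3, 9) shading coefficients."""
    return np.asarray(sh_radiance, dtype=float) * _LAMBERT

def shade_vertices(normals, albedo, shadow, sh_shading):
    """Per-vertex colour = albedo * shadow * SH shading.

    normals: (V, 3) unit vectors; albedo: (V, 3); shadow: (V,) in [0, 1];
    sh_shading: (3, 9), one row of coefficients per colour channel.
    """
    shading = sh_basis(normals) @ sh_shading.T    # (V, 9) @ (9, 3) -> (V, 3)
    shading = np.maximum(shading, 0.0)            # clamp negative SH ringing
    return albedo * shadow[:, None] * shading
```

Under a uniform sky of radiance 1, every vertex with albedo 1 and no shadow should come out with colour 1, whatever its normal.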

Narrated Video with Experiments

Download Video: HD (MP4, 64 MB)

Downloads

Citation

@InProceedings{rudnev2022nerfosr,
  title={NeRF for Outdoor Scene Relighting},
  author={Viktor Rudnev and Mohamed Elgharib and William Smith and Lingjie Liu and Vladislav Golyanik and Christian Theobalt},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2022}
}

Acknowledgments

We thank Christen Millerdurai for his help with recording the dataset. This work was supported by the ERC Consolidator Grant 4DRepLy (770784).

Contact

For questions and clarifications, please get in touch with:

Vladislav Golyanik

golyanik@mpi-inf.mpg.de

Mohamed Elgharib

elgharib@mpi-inf.mpg.de

Viktor Rudnev

vrudnev@mpi-inf.mpg.de