State-of-the-Art Report

Dense Monocular Non-Rigid 3D Reconstruction

Abstract

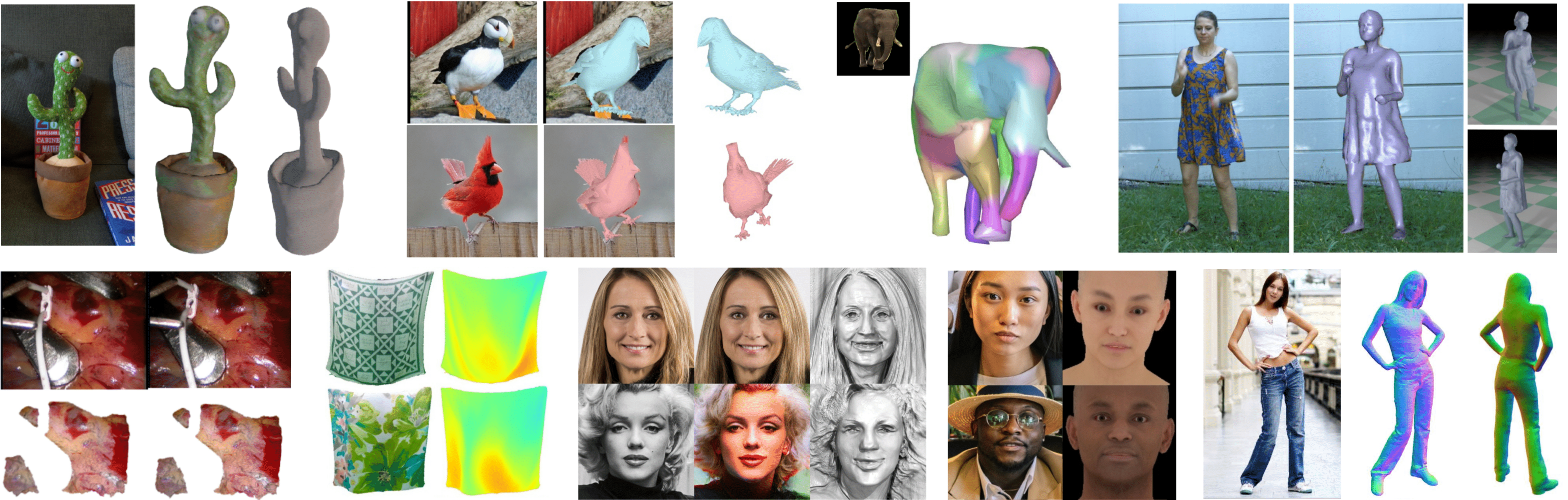

3D reconstruction of deformable (or non-rigid) scenes from a set of monocular 2D image observations is a long-standing and actively researched area of computer vision and graphics. It is an ill-posed inverse problem, since—without additional prior assumptions—it permits infinitely many solutions leading to accurate projection to the input 2D images. Non-rigid reconstruction is a foundational building block for downstream applications like robotics, AR/VR, or visual content creation. The key advantage of using monocular cameras is their omnipresence and availability to the end users as well as their ease of use compared to more sophisticated camera set-ups such as stereo or multi-view systems. This survey focuses on state-of-the-art methods for dense non-rigid 3D reconstruction of various deformable objects and composite scenes from monocular videos or sets of monocular views. It reviews the fundamentals of 3D reconstruction and deformation modeling from 2D image observations. We then start from general methods—that handle arbitrary scenes and make only a few prior assumptions—and proceed towards techniques making stronger assumptions about the observed objects and types of deformations (e.g. human faces, bodies, hands, and animals). A significant part of this STAR is also devoted to classification and a high-level comparison of the methods, as well as an overview of the datasets for training and evaluation of the discussed techniques. We conclude by discussing open challenges in the field and the social aspects associated with the usage of the reviewed methods.

Contents

This survey focuses on methods from recent years for non-rigid 3D reconstruction that take one or several consecutive views from a single camera as input and that output dense 3D reconstructions of the scene in each view or point in time spanning the observations.

First, in the fundamentals section, we describe the basics of non-rigid 3D reconstruction, which covers many aspects that are useful in order to understand any particular work on non-rigid 3D reconstruction. Then the main axis along which we organize our discussion is the object category that is to be reconstructed, structured as follows:

We provide talk recordings for selected parts of this STAR.-

General Objects

-

Shape-from-Template (SfT)

Shape from Template, or template-based reconstruction, comprises monocular non-rigid 3D reconstruction methods that assume a single static shape or template is given as a prior.

-

Non-rigid Structure-from-Motion (NRSfM)

Whereas SfT uses the information present in a single image to deform the template, NRSfM relies on motion and deformation cues for 3D recovery of deformable surfaces and is more generally applicable than SfT. The input to NRSfM are 2D point tracks across multiple images, also called measurements or measurement matrices, and the output is a set of per-view camera-object poses and 3D shapes.

-

Neural Rendering (NeRF-like) Methods

Neural radiance fields have introduced a new area of general dynamic reconstruction methods from a video that do not neatly fall into SfT or NRSfM. Crucially, these methods all combine naïve volumetric rendering and a neural scene parametrization, but differ widely in the specific kinds of input annotation used.

-

Other Methods for Few Scene Reconstruction

There are a number of other reconstruction works that focus on a single or a few scenes but do not fall into any of the previously discussed categories. We group them together here since a per-scene parametrization (i.e. auto-decoding) is still feasible in this problem setting.

-

Methods using Data-driven Prior

When working with large-scale image collections instead of a few scenes at most, per-scene parametrization ceases to be practicable. Methods in this category thus need to rely on a data-driven prior. While this setting has long been common for category-specific methods, it recently turned out to be a viable path for general methods as well.

-

-

Humans

Capturing the deforming 3D surface of humans from a single RGB camera, also called monocular (human) performance capture, has become a very active research area over the last decade. A key difference to general methods is the introduction of human specific priors because the rough shape and topology remain the same irrespective of gender, age, and clothing type.

-

Template-Free Methods

Template-free methods do not assume prior knowledge of the specific 3D geometry.

-

Approaches using Parametric Models

Parametric methods leverage a low-dimensional parametric model (SMPL, SMPL-X, GHUM(L), or imGHUM) of humans obtained by a database of 3D scans of thousands of humans.

-

Template-Based Methods

Template-based methods assume a pre-scanned textured 3D mesh of the clothed person.

-

-

Faces

3D reconstruction of faces from monocular images is a heavily researched topic. In contrast to many other object types, faces have such advantageous properties as symmetry, small deformations and well-defined keypoints that can be exploited in the ill-posed 3D reconstruction setting.

-

Explicit Morphable Models

Classical 3D Morphable Models (3DMMs) are statistical models of face shape and appearance variation with an explicit surface representation. They are built from a (comparably) small set of hundreds of faces and can be used as a prior for non-rigid 3D face reconstruction.

-

Implicit Morphable Models

As compared to mesh-based representations, modern implicit models are not restricted to a fixed topology and, as a result, can model the entire head, including hair. As these methods also model the face appearance using neural networks (which generally have a much better capacity than simple linear models of 3DMMs), they can synthesize photorealistic faces.

-

Specialized Models of Face Parts

Faces are complex, and some facial components that are hard to model with global models are targeted with specific models.

-

-

Hands

Human hands are articulated objects with pose-dependent deformations on a fine scale and cause severe self-occlusions. Methods assume a stronger 3D shape prior, i.e. a statistical parametric hand model covering the entire space of hand shapes to mitigate these challenging self-occlusions and appearance variations.

-

Animals

Interest in animal-centered reconstruction has started growing only recently with the introduction of the SMAL model, a SMPL-style parametric model for quadrupeds learned from 3D scans of animal toys.

-

Emerging Areas

Along with the emphasis on the emerging fields of neural scene representations and neural rendering, we elaborate on current challenges in the field and discuss two nascent but promising future directions: methods using event cameras and physics-aware approaches. We close by providing an overview of the impact that this field has on society.

Extended Version of the Survey

Apart from minor additions throughout, the extended version includes substantial additions to the Fundamentals section and to the Open Challenges. It also discusses Shape from Shading, a class of methods that has turned dormant in recent years.

Citation

@article{TretschkNonRigidSurvey2023,

title = {State of the Art in Dense Monocular Non-Rigid 3D Reconstruction},

author = {Tretschk, Edith and Kairanda, Navami and {B R}, Mallikarjun and Dabral, Rishabh and Kortylewski, Adam and Egger, Bernhard and Habermann, Marc and Fua, Pascal and Theobalt, Christian and Golyanik, Vladislav},

journal = {Computer Graphics Forum (Eurographics State of the Art Reports)},

year = {2023}

}Contact

For questions, clarifications, please get in touch with:Edith Tretschk tretschk@mpi-inf.mpg.de

Navami Kairanda nkairand@mpi-inf.mpg.de

Vladislav Golyanik golyanik@mpi-inf.mpg.de