MoCapDeform: Monocular 3D Human Motion Capture in Deformable Scenes

Abstract

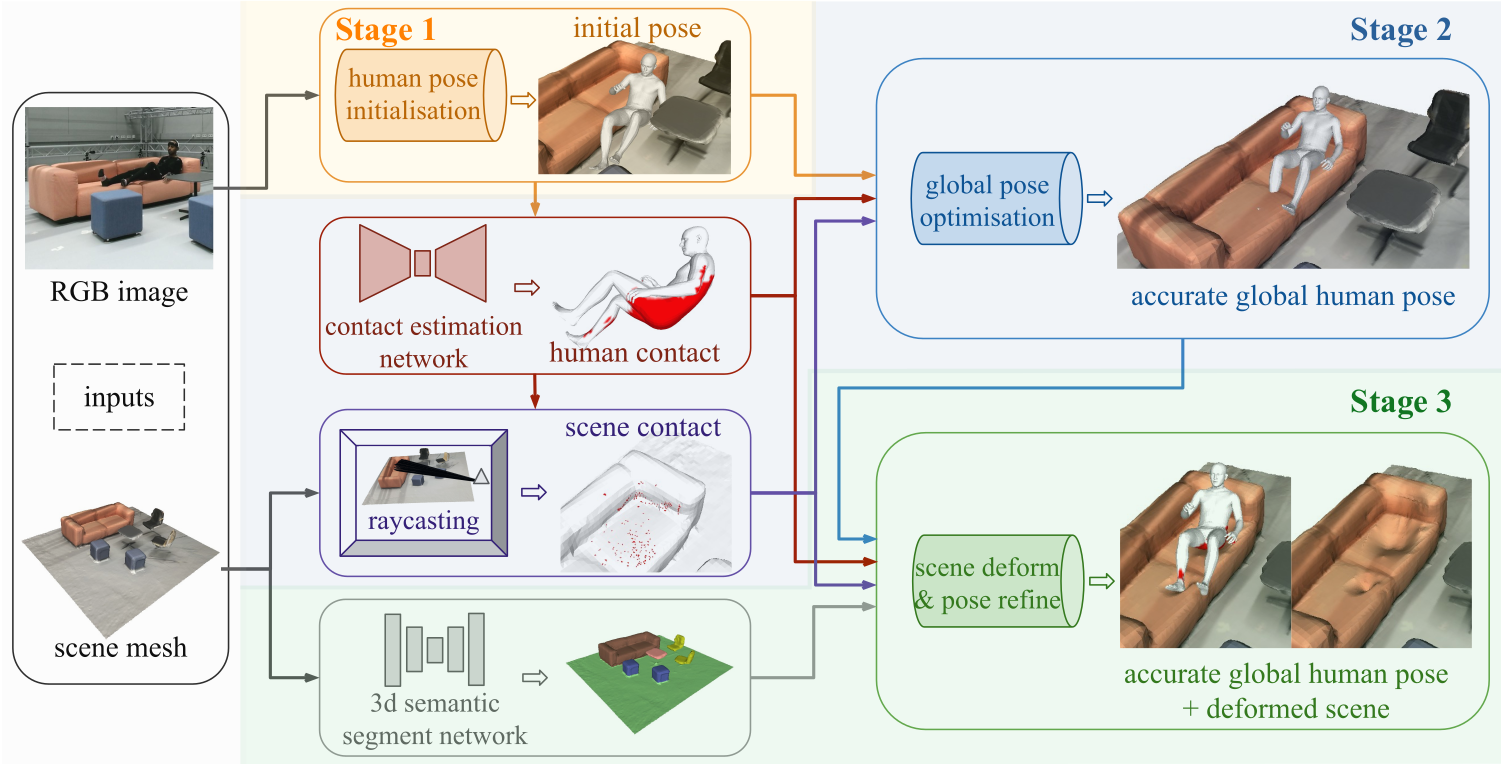

3D human motion capture from monocular RGB images respecting interactions of a subject with complex and possibly deformable environments is a very challenging, ill-posed and under-explored problem. Existing methods address it only weakly and do not model possible surface deformations often occurring when humans interact with scene surfaces. In contrast, this paper proposes MoCapDeform, i.e., a new framework for monocular 3D human motion capture that is the first to explicitly model non-rigid deformations of a 3D scene for improved 3D human pose estimation and deformable environment reconstruction. MoCapDeform accepts a monocular RGB video and a 3D scene mesh aligned in the camera space. It first localises a subject in the input monocular video along with dense contact labels using a new raycasting based strategy. Next, our human-environment interaction constraints are leveraged to jointly optimise global 3D human poses and non-rigid surface deformations. MoCapDeform achieves superior accuracy than competing methods on several datasets, including our newly recorded one with deforming background scenes.

Experimental Results

Comparisons: MoCapDeform (ours) vs PROX (Hassan et al., 2019)

Existing Methods (PROX, Hassan et al., 2019)

MoCapDeform (ours)

MoCapDeform Dataset

Comparisons on MoCapDeform (MCD) Dataset

Bean bag (error map)

Downloads

Citation

@inproceedings{Li_3DV2022,

title={MoCapDeform: Monocular 3D Human Motion Capture in Deformable Scenes},

author={Zhi Li and Soshi Shimada and Bernt Schiele and Christian Theobalt and Vladislav Golyanik},

booktitle = {International Conference on 3D Vision (3DV)},

year={2022}

}

Contact

For questions, clarifications, please get in touch with:Zhi Li

zhili@mpi-inf.mpg.de

Soshi Shimada

sshimada@mpi-inf.mpg.de

Vladislav Golyanik

golyanik@mpi-inf.mpg.de