Φ-SfT: Shape-from-Template with a Physics-Based Deformation Model

Abstract

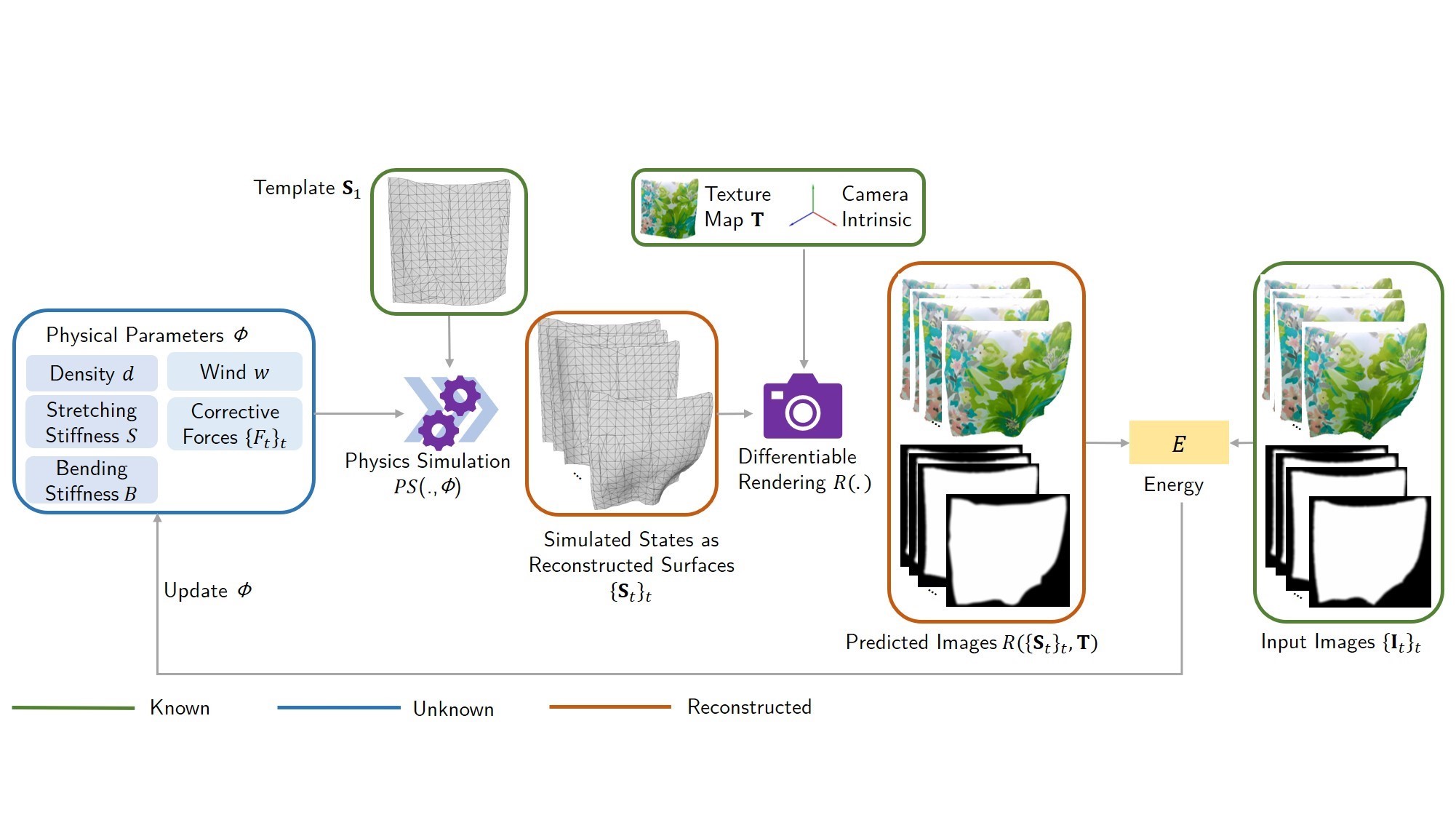

Shape-from-Template (SfT) methods estimate 3D surface deformations from a single monocular RGB camera, given a 3D state known in advance (a template). This is an important yet challenging problem because the monocular setting is underconstrained. Existing SfT techniques predominantly use geometric and simplified deformation models, which often limits their reconstruction abilities. In contrast to previous works, this paper proposes a new SfT approach that explains 2D observations through physical simulations accounting for forces and material properties. Our differentiable physics simulator regularises the surface evolution and optimises material elastic properties such as bending coefficients, stretching stiffness and density. We use a differentiable renderer to minimise the dense reprojection error between the estimated 3D states and the input images, and we recover the deformation parameters with adaptive gradient-based optimisation. For the evaluation, we use an RGB-D camera to record challenging real surfaces with various material properties and textures that are exposed to physical forces. Our approach significantly reduces the 3D reconstruction error compared to multiple competing methods.
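To make the idea of a dense reprojection error minimised with adaptive gradient-based optimisation more concrete, here is a deliberately tiny sketch; it is not the authors' renderer. A single 3D point (x, Z) is projected with a pinhole model u = f·x/Z + c and splatted into a 1D image as a Gaussian blob, the squared intensity difference is summed over every pixel, and the analytic gradient with respect to x drives a standard Adam update. The image size, focal length, blob width, fixed depth and Adam hyper-parameters are all assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical toy setup: image width, focal length, principal point, fixed depth, blob width (px).
W, FOCAL, CX, Z, SIGMA = 64, 50.0, 32.0, 2.0, 3.0
PIXELS = np.arange(W, dtype=float)


def render(x):
    """Soft-splat the 3D point (x, Z) into a 1D image as a Gaussian blob."""
    u = FOCAL * x / Z + CX  # pinhole projection to a pixel coordinate
    return np.exp(-((PIXELS - u) ** 2) / (2 * SIGMA**2)), u


def loss_and_grad(x, target):
    """Squared intensity error summed over ALL pixels, and its derivative w.r.t. x."""
    img, u = render(x)
    r = img - target
    dimg_du = img * (PIXELS - u) / SIGMA**2        # derivative of each pixel w.r.t. u
    grad = np.sum(2.0 * r * dimg_du) * FOCAL / Z   # chain rule: du/dx = FOCAL / Z
    return np.sum(r**2), grad


def adam(x, target, steps=500, lr=0.01, b1=0.9, b2=0.999, eps=1e-8):
    """Standard Adam update on the single unknown x."""
    m = v = 0.0
    for t in range(1, steps + 1):
        _, g = loss_and_grad(x, target)
        m = b1 * m + (1 - b1) * g        # running mean of gradients
        v = b2 * v + (1 - b2) * g**2     # running mean of squared gradients
        m_hat = m / (1 - b1**t)          # bias-corrected moments
        v_hat = v / (1 - b2**t)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)
    return x


target, _ = render(0.3)    # synthetic "input image" of a point at x = 0.3
x_est = adam(0.1, target)  # initial guess projects 5 px away from the target
print(f"recovered x = {x_est:.4f}")
```

Because the loss compares whole images rather than a few matched points, every pixel covered by the blob contributes to the gradient; the soft splat is what keeps the image differentiable with respect to the 3D position.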

Method

Figure 2: Given a sequence of monocular input images {I_t}_t, a template at the rest position S_1 and the corresponding texture map T, our technique solves for the unknown physical parameters Φ that describe the deforming 3D surface {S_t}_t. We optimise the per-sequence physical parameters {d, S, B, w} as well as the per-frame corrective forces {F_t}_t in a gradient-based manner. We use (1) a differentiable physics-based simulator PS to reconstruct meshes with a physical deformation model and (2) a differentiable renderer R to project the reconstructions into image space, which allows us to define a reprojection error over all pixels (instead of only over vertices) during optimisation. Because both components are differentiable, the gradients of the total energy E can be back-propagated all the way to the unknown physical parameters.
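The split between per-sequence and per-frame unknowns can be illustrated with a very small stand-in for the cloth simulator: one particle on a 1D spring, advanced with semi-implicit Euler, where a single stiffness k plays the role of the per-sequence material parameters and a force F_t is added at every step. Gradients are carried through the rollout in forward mode, and the energy is minimised with plain gradient descent. The spring model, the L2 penalty on the forces (weight lam), the step size, the data term on positions instead of rendered images and all constants are assumptions for illustration; the actual φ-SfT simulator, renderer and energy are not reproduced here.

```python
import numpy as np


def simulate(k, forces, x0=1.0, v0=0.0, m=1.0, dt=0.05):
    """Roll out a 1D mass-spring with semi-implicit Euler.

    Returns positions x_1..x_T and their Jacobian w.r.t.
    theta = [k, F_0, ..., F_{T-1}], carried along as forward-mode tangents.
    """
    T = len(forces)
    x, v = x0, v0
    jx, jv = np.zeros(1 + T), np.zeros(1 + T)  # dx/dtheta, dv/dtheta
    xs, J = np.zeros(T), np.zeros((T, 1 + T))
    for t in range(T):
        a = (-k * x + forces[t]) / m
        ja = -k * jx / m        # dependence through the current position
        ja[0] -= x / m          # direct dependence on the stiffness k
        ja[1 + t] += 1.0 / m    # direct dependence on the corrective force F_t
        v, jv = v + dt * a, jv + dt * ja  # velocity update first ...
        x, jx = x + dt * v, jx + dt * jv  # ... then position uses the new velocity
        xs[t], J[t] = x, jx
    return xs, J


def energy_and_grad(theta, x_obs, lam=0.1):
    """E = sum_t (x_t - x_obs_t)^2 + lam * sum_t F_t^2, and dE/dtheta."""
    k, forces = theta[0], theta[1:]
    xs, J = simulate(k, forces)
    r = xs - x_obs
    e = np.sum(r**2) + lam * np.sum(forces**2)
    g = 2.0 * J.T @ r
    g[1:] += 2.0 * lam * forces
    return e, g


T = 40
x_obs, _ = simulate(4.0, np.zeros(T))         # synthetic observations: k = 4, no extra forces
theta = np.concatenate([[2.5], np.zeros(T)])  # initial guess: k = 2.5, zero forces
e0, _ = energy_and_grad(theta, x_obs)
for _ in range(500):
    _, g = energy_and_grad(theta, x_obs)
    theta -= 0.1 * g                          # plain gradient descent step
e, _ = energy_and_grad(theta, x_obs)
print(f"E: {e0:.3f} -> {e:.2e}, recovered k = {theta[0]:.3f}")
```

In this toy, unpenalised per-step forces could reproduce any observed trajectory on their own, so the assumed penalty keeps them small and leaves the shared stiffness to explain most of the motion; how φ-SfT itself balances its corrective forces is not stated on this page.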

Dataset

Figure 3: We record the φ-SfT real dataset, a new collection of nine sequences with reference depth data, to facilitate quantitative comparisons of monocular 3D surface reconstruction methods. Our setup consists of a synchronised RGB camera and an Azure Kinect, whose depth camera provides depth maps that serve as pseudo ground truth.
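This page does not say which error is computed against the Kinect depth. One common way to compare a reconstructed surface with a depth map is to back-project the valid depth pixels into a point cloud with the pinhole model and measure a symmetric Chamfer distance to the reconstructed vertices. The sketch below assumes known intrinsics fx, fy, cx, cy, depth in metres with 0 marking missing pixels, and both point sets already expressed in the same camera frame; it is not necessarily the metric used in the paper.

```python
import numpy as np


def backproject(depth, fx, fy, cx, cy):
    """Lift valid pixels of a depth map (H x W, metres, 0 = missing) to an N x 3
    point cloud with the pinhole model X = (u - cx) Z / fx, Y = (v - cy) Z / fy."""
    v, u = np.nonzero(depth > 0)  # row (v) and column (u) indices of valid pixels
    z = depth[v, u]
    return np.stack([(u - cx) * z / fx, (v - cy) * z / fy, z], axis=1)


def chamfer(a, b):
    """Symmetric Chamfer distance: mean nearest-neighbour distance a->b plus b->a.
    Brute force with an N x M distance matrix, so only suitable for small clouds."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return d.min(axis=1).mean() + d.min(axis=0).mean()


# Toy check: a fronto-parallel plane 1 m away and a copy shifted 1 cm along the optical axis.
depth = np.ones((8, 8))
pts = backproject(depth, fx=100.0, fy=100.0, cx=3.5, cy=3.5)
recon = pts + np.array([0.0, 0.0, 0.01])
print(chamfer(pts, recon))  # about 0.02 (0.01 m in each direction)
```

For full-resolution depth maps, a k-d tree such as scipy.spatial.cKDTree would replace the dense distance matrix for the nearest-neighbour queries.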

Φ-SfT Reconstructions: Input and Novel View

Φ-SfT Reconstructions: Depth Map Visualisation

Comparison: Shape-from-Template Methods

Comparison: Non-Rigid Structure-from-Motion Methods


Citation

@inproceedings{kair2022sft,
  title={$\phi$-SfT: Shape-from-Template with a Physics-Based Deformation Model},
  author={Navami Kairanda and Edith Tretschk and Mohamed Elgharib and Christian Theobalt and Vladislav Golyanik},
  booktitle={Computer Vision and Pattern Recognition (CVPR)},
  year={2022}
}

Contact

For questions or clarifications, please get in touch with:

Navami Kairanda
nkairand@mpi-inf.mpg.de

Vladislav Golyanik
golyanik@mpi-inf.mpg.de

Edith Tretschk
tretschk@mpi-inf.mpg.de